A collaborative study between IBP and New York University published in PLoS Biology

A paper by Dr. Huan Luo, Dr. Zuxiang Liu (State Key Laboratory of Brain and Cognitive Science, Institute of Biophysics) and Dr. David Poeppel (New York University), titled “Auditory cortex tracks both auditory and visual stimulus dynamics using low-frequency neuronal phase modulation”, is now online on the PLoS Biology website. The study recorded magnetoencephalography (MEG) signals from human subjects viewing audiovisual movie clips. It investigated how the brain represents complex, naturalistic sensory streams in real time, and the neural mechanisms underlying audiovisual integration and cross-sensory temporal binding.

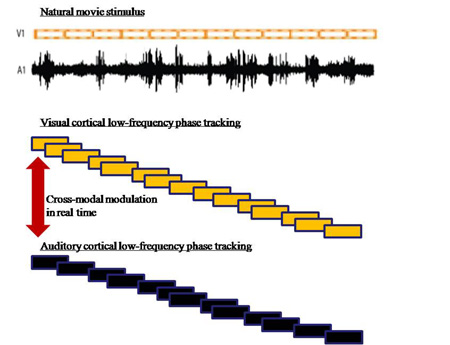

We live in a world full of dynamic, multisensory natural input (e.g., conversations, movies), and our brains must coordinate information from multiple sensory systems in real time to create a seamless, unified internal representation of the outside world. However, little is currently known about the neuronal mechanisms underlying dynamic cross-modal processing under natural conditions. In this study, Luo et al. recorded MEG data from human subjects watching and listening to short natural movie clips. They found that both auditory and visual cortex track stimulus dynamics not only within their own modality (auditory cortex tracking auditory input, visual cortex tracking visual input) but also across modalities (auditory cortex tracking visual input, visual cortex tracking auditory input). Crucially, continuous cross-modal phase modulation appears to underlie this integrative processing, allowing the brain to internally construct behaviorally relevant, dynamic multisensory scenes. The work suggests possible neural mechanisms relevant to one of the most important questions in auditory perception, the “cocktail party problem”. This work was supported by the Ministry of Science and Technology, the Chinese Academy of Sciences, and the Natural Science Foundations.